Benchmark results for v0.14.0

We benchmarked LeoFS v0.14.0 on April 12th. The test environment is described below. We used servers with a typical spec running CentOS 6.3, and LeoFS’s consistency level was the same as in our production settings.

Test Environment

| Item | Value |
|---|---|
| Hardware | |
| CPU | 8-core |
| RAM | 16GB |
| HDD | 7200rpm (Capacity: 1TB) |
| Network | 10Gbps Ethernet |
| OS/Middleware | |
| OS | Linux 2.6.32-279.22.1.el6.x86_64 |
| Erlang | Erlang R15B03-1 (erts-5.9.3.1) |
| LeoFS Cluster | |
| # of Benchmarker | 1 |
| # of LeoFS-Manager | 1 |
| # of LeoFS-Gateway | 2 |
| # of LeoFS-Storage | 5 |
| Consistency Level | |
| # of replicas | 3 |
| # of successful WRITE | 2 |
| # of successful GET | 1 |
| Prerequisites | |
| # of loaded objects | 100,000 |
| Stress Tool Settings (basho_bench’s configuration file) | |
| R:8 W:2, “exponential_bin” | |
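
To make the consistency level in the table concrete, here is a minimal sketch of how the three numbers (3 replicas, 2 successful WRITEs, 1 successful GET) could decide whether a request counts as successful. It is an illustration only, not LeoFS code; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsistencyLevel:
    replicas: int  # number of copies kept of each object
    write_ok: int  # acknowledgements needed for a successful WRITE
    read_ok: int   # replies needed for a successful GET


def write_succeeds(level: ConsistencyLevel, acks: int) -> bool:
    """A write counts as successful once enough replicas acknowledge it."""
    return acks >= level.write_ok


def read_succeeds(level: ConsistencyLevel, replies: int) -> bool:
    """A read counts as successful once enough replicas reply."""
    return replies >= level.read_ok


def tolerated_failures(level: ConsistencyLevel) -> tuple:
    """Unavailable replicas that (writes, reads) can still survive."""
    return (level.replicas - level.write_ok, level.replicas - level.read_ok)


# The values used in this benchmark.
BENCHMARK = ConsistencyLevel(replicas=3, write_ok=2, read_ok=1)
```

Under these assumptions, a write still succeeds with one replica unavailable and a read still succeeds with two replicas unavailable.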

Test Results

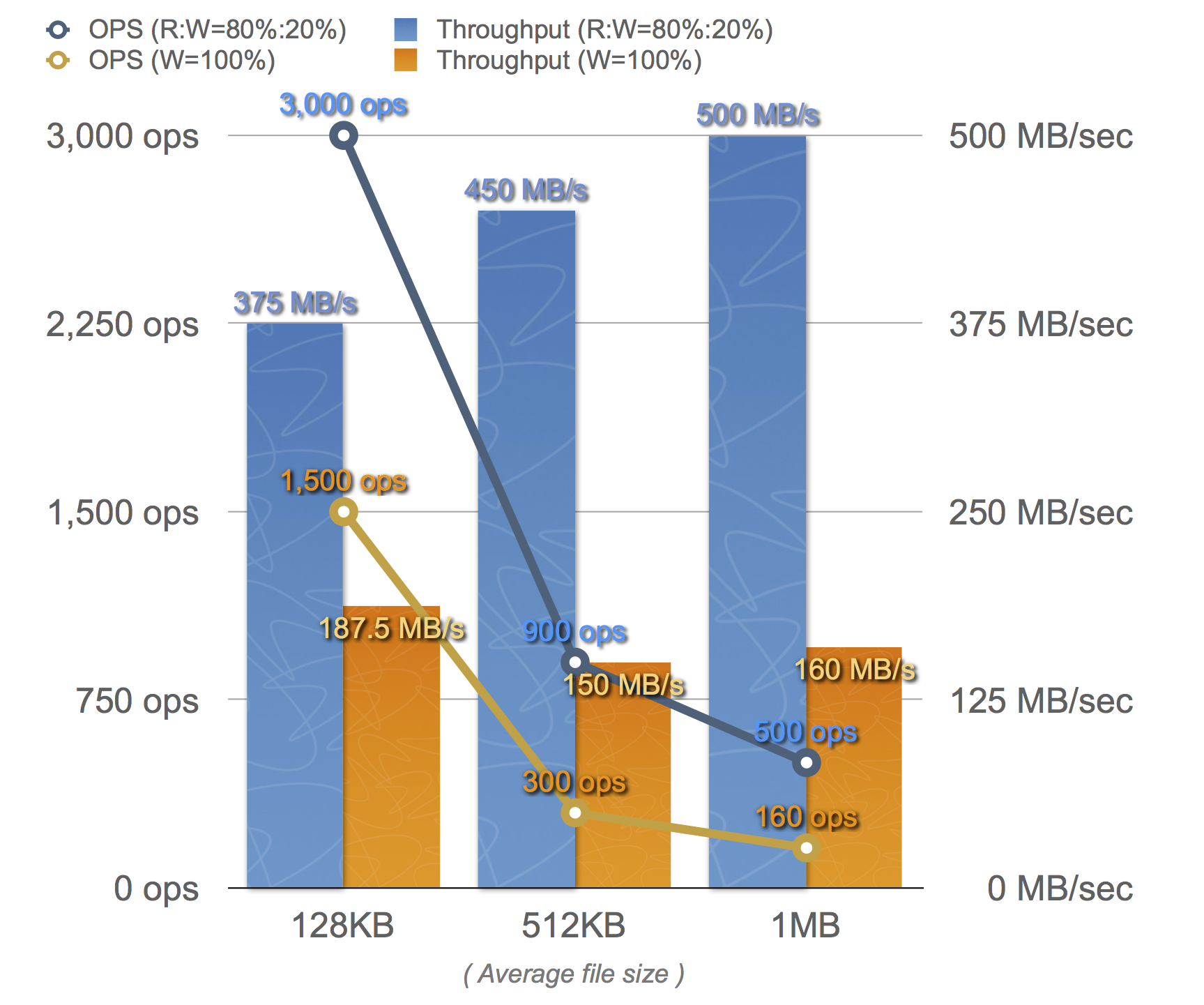

The test results are shown above. In every benchmark, we found that the bottleneck was disk I/O. Retrieval of small files (128KB on average) also has room for improvement, so this week we started improving Leo's object-cache library.

Detailed benchmark configuration for an average file size of 128KB

| Item | Value |
|---|---|
| Mode | Max |
| Duration | 1,000 sec |
| # of concurrents | 64 |
| Driver | basho_bench_driver_leofs |
| Misc | |

Detailed benchmark configuration for an average file size of 512KB

| Item | Value |
|---|---|
| Mode | Max |
| Duration | 1,000 sec |
| # of concurrents | 64 |
| Driver | basho_bench_driver_leofs |
| Misc | |

Detailed benchmark configuration for an average file size of 1MB

| Item | Value |
|---|---|
| Mode | Max |
| Duration | 1,000 sec |
| # of concurrents | 64 |
| Driver | basho_bench_driver_leofs |
| Misc | |

We plan to run the next benchmark against LeoFS v0.14.2-RC at the end of this month. That benchmark will also include large files, over 4MB in size.

We keep improving and growing LeoFS. Please look forward to the next version.

LeoFS overview

Our Motivation

We found storage problems in our company: many services depended on expensive storage products, which held all kinds of unstructured data such as images and documents.

We had to resolve three problems:

Low ROI - Services with a low budget cannot afford expensive storage.

Possibility of SPOF - Depending on the budget, it is difficult to build a redundant configuration with expensive products.

Storage expansion is difficult as data grows - An expensive storage product cannot be easily expanded.

We needed to move away from expensive storage to something else.

Aim to ...

After a lot of trial and error, we finally managed to satisfy our storage requirements, which are three things:

ONE-Huge storage - A so-called storage platform.

Non-Stop storage - The storage system receives requests from many web services, which require it to be always running.

Specialized in the Web - All web services need to communicate easily with the storage system, so we decided to provide a REST API over HTTP rather than FUSE. Depending on one specific storage product would also prevent the system from scaling.

LeoFS Overview

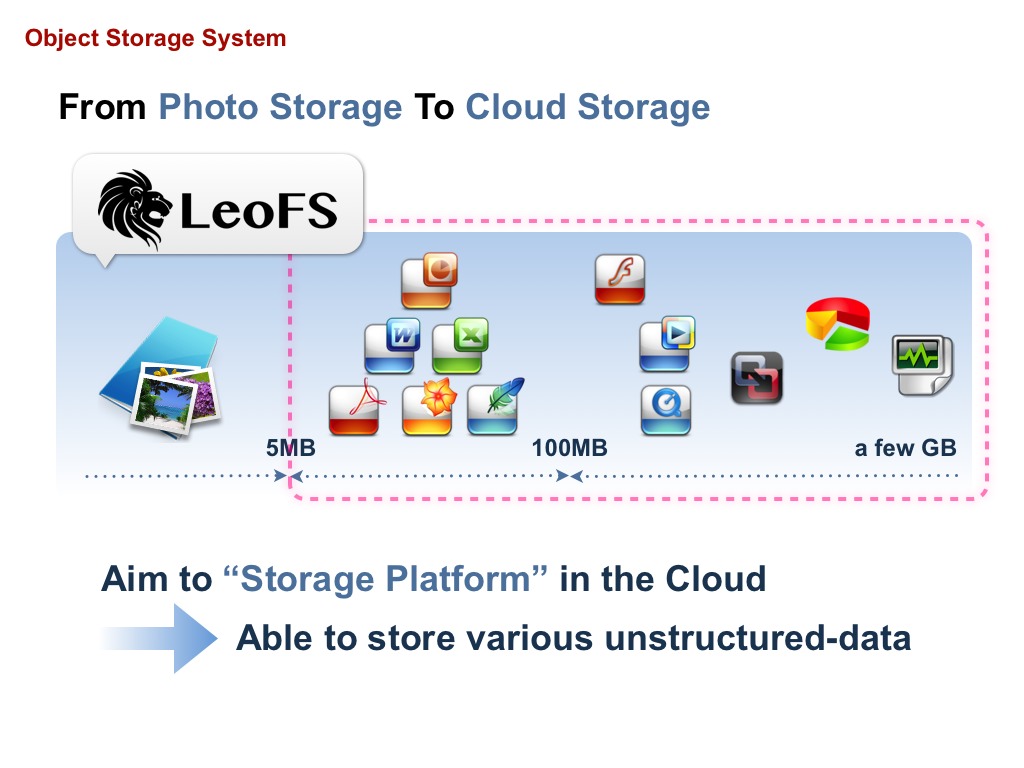

LeoFS can store various kinds of unstructured data such as photos, documents, movies, and log data. It already covers everything from small files to large files.

We aim to be a storage platform in the cloud.

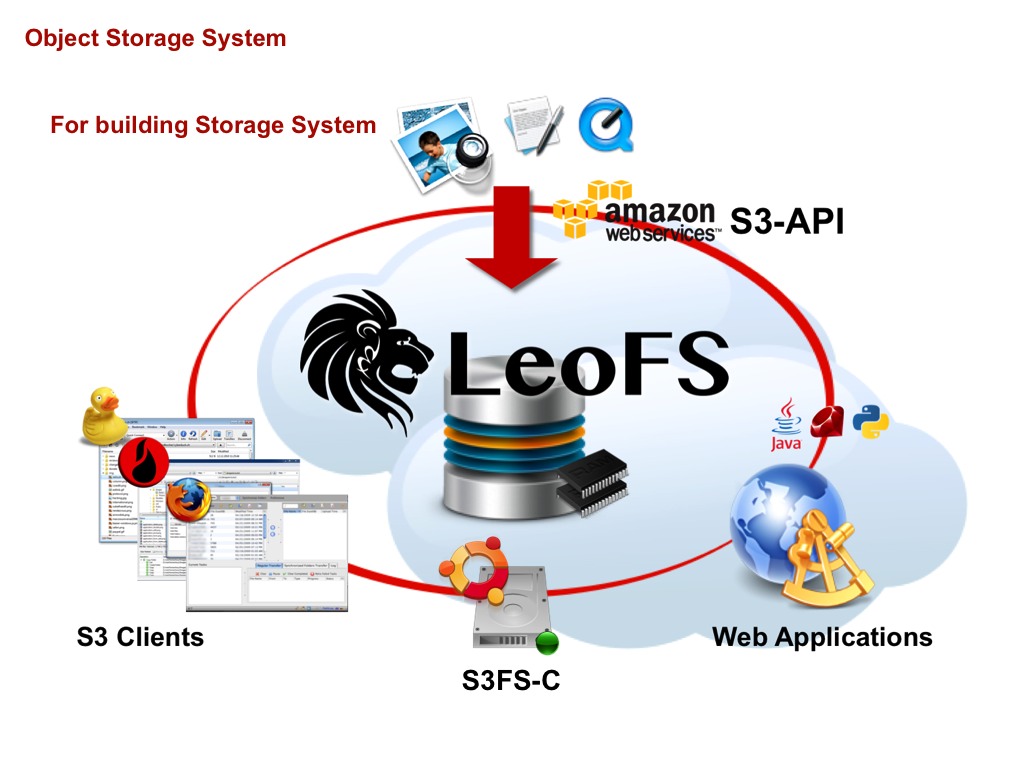

To build a storage platform, we provide the S3 API, because clients for it already exist in many programming languages, along with GUI clients and other tools, and all of them can communicate with LeoFS smoothly.

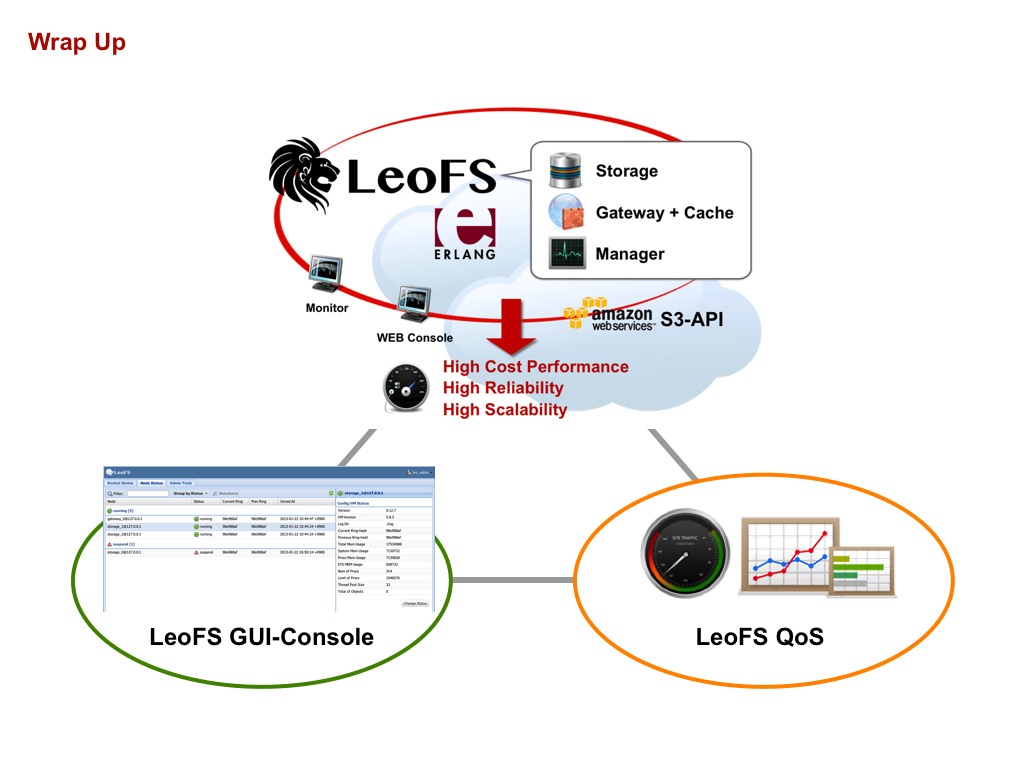

With LeoFS at the center, we have been building a storage platform in our company. It needs three things:

- High cost performance ratio

- High reliability

- High scalability

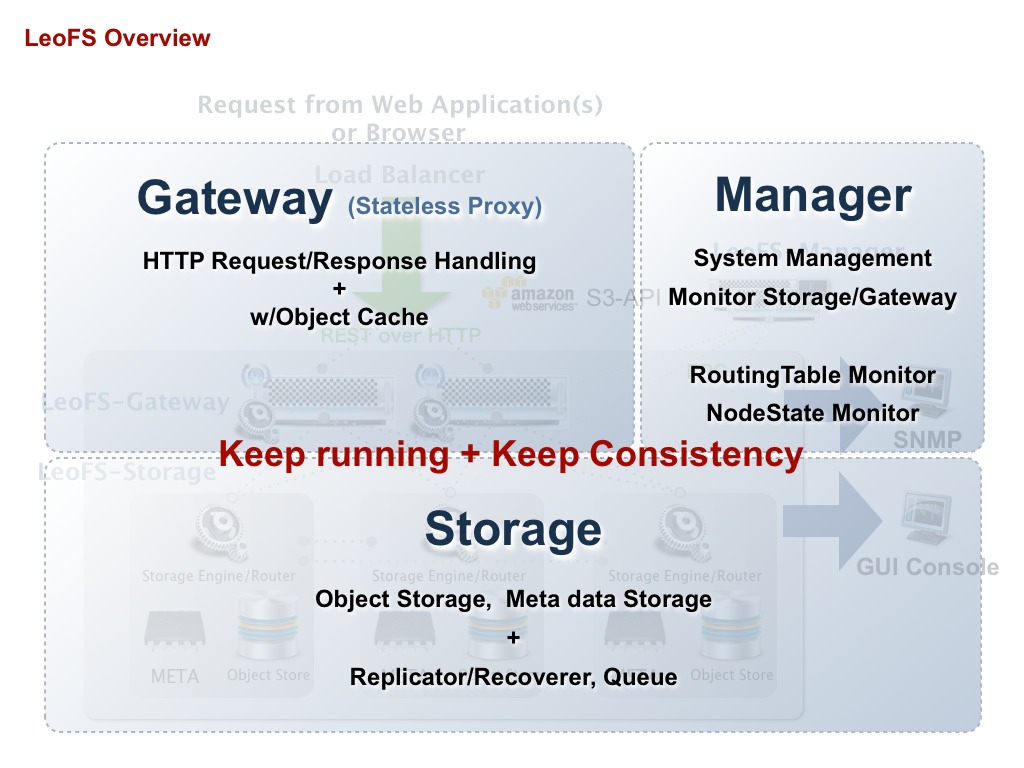

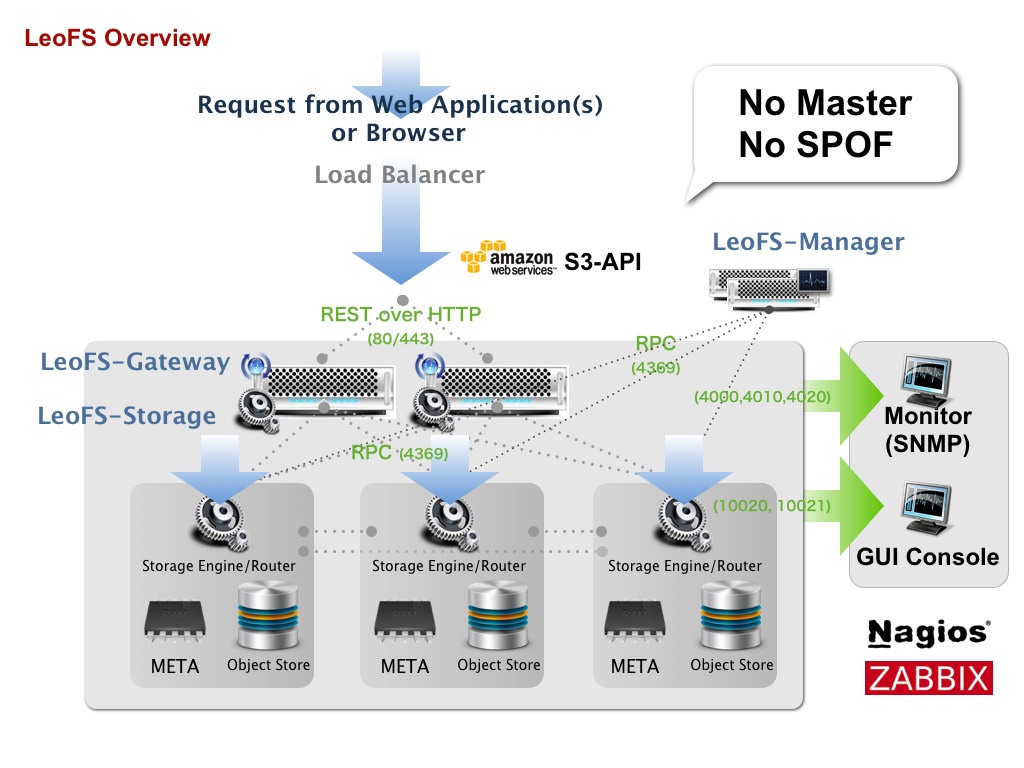

LeoFS consists of three components, Storage, Gateway and Manager, all of which are built on Erlang.

Gateway handles HTTP requests and responses from clients that use the REST API or the S3 API, and it has a built-in object-cache mechanism.

Storage handles GET, PUT and DELETE of objects as well as their metadata. It also has a replicator, a recoverer and a queueing mechanism to keep running and stay consistent.

Manager constantly monitors gateway nodes and storage nodes. It mainly monitors node status and the RING’s checksum to keep the system running and consistent.

LeoFS’s system layout is very simple. LeoFS does not have a master server, so there is no SPOF.

LeoFS already implements an SNMP agent, so you can easily monitor it with monitoring tools such as Nagios and Zabbix.

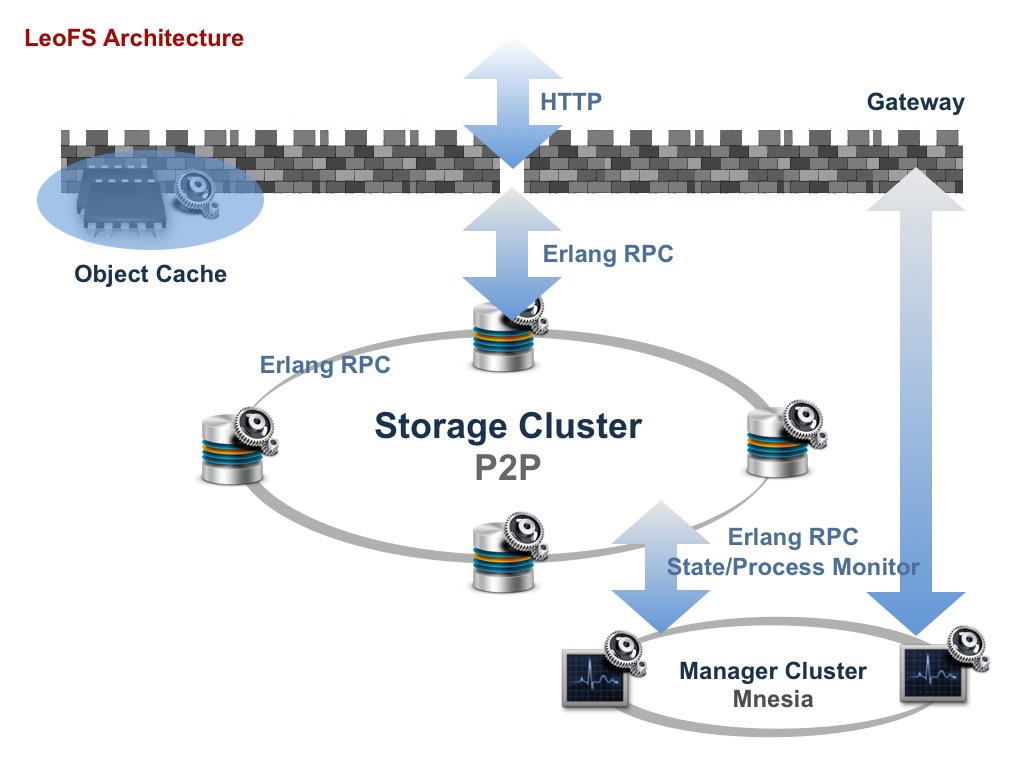

Inside LeoFS

The components are loosely coupled and communicate with each other through Erlang’s RPC, as do the nodes inside the storage cluster.

LeoFS Gateway

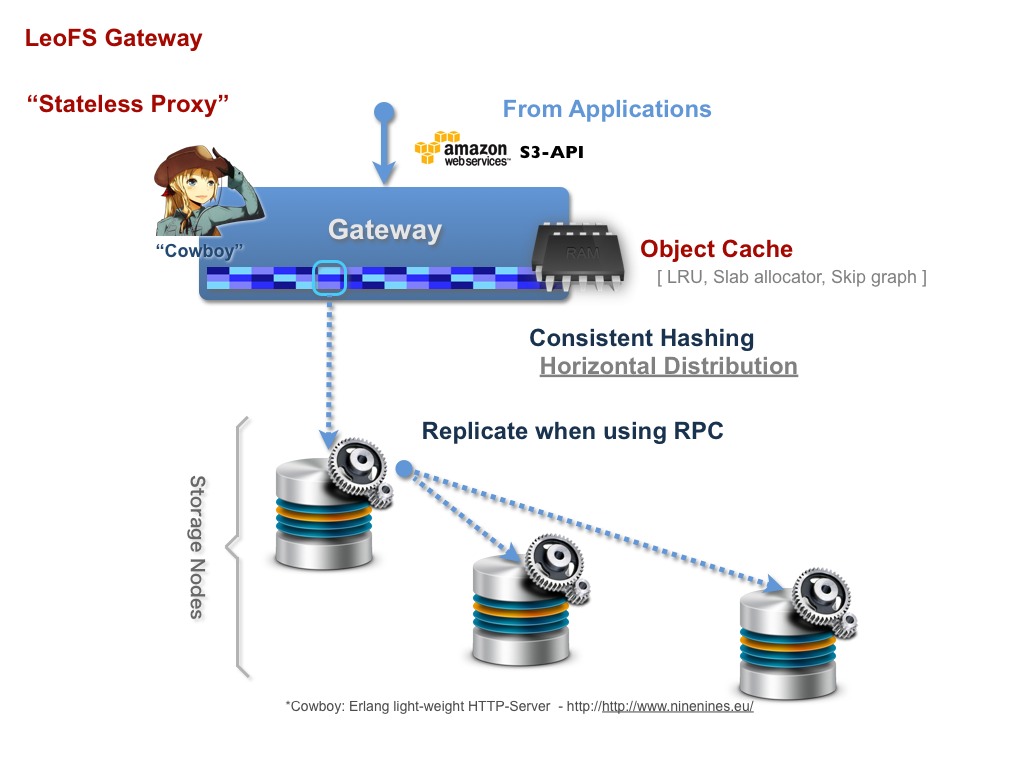

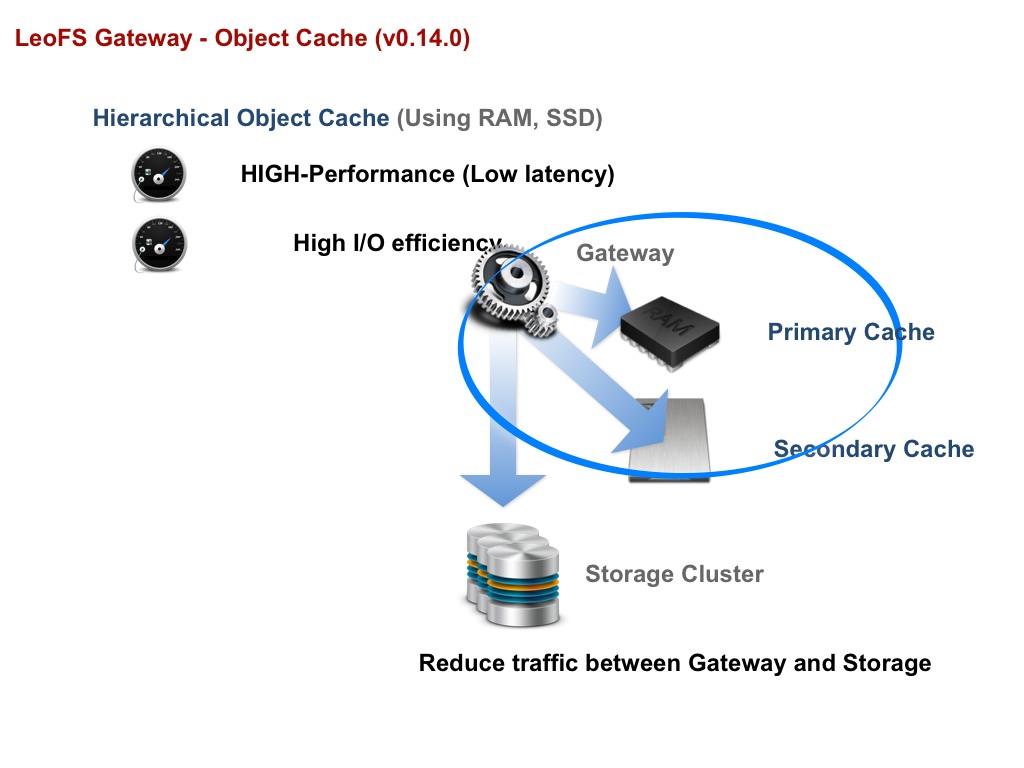

Gateway consists of a stateless proxy and an object cache. You can easily add gateway nodes under high load. We chose Cowboy as Gateway’s HTTP server because we expected high performance from it.

It already provides a REST API and the S3 API, both of which are simple APIs. To handle a request, Gateway consults the RING, which is based on consistent hashing, and sends the request to a storage node.
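
As an illustration of a RING lookup based on consistent hashing, here is a minimal sketch. It is not LeoFS's implementation: the `Ring` class, the SHA-256 hash, the number of virtual nodes and the node names are all assumptions made for the example.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    """Map a string to a point on the ring (SHA-256 is an assumption here)."""
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest(), "big")


class Ring:
    """Hypothetical consistent-hashing ring with virtual nodes."""

    def __init__(self, nodes, vnodes=64):
        # Each physical node is placed on the ring at several points.
        self._points = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._hashes = [point for point, _ in self._points]
        self._node_count = len(set(nodes))

    def lookup(self, key, replicas=1):
        """Return up to `replicas` distinct nodes, walking clockwise from the key."""
        wanted = min(replicas, self._node_count)
        if wanted <= 0:
            return []
        start = bisect.bisect_right(self._hashes, _hash(key))
        found = []
        for step in range(len(self._points)):
            node = self._points[(start + step) % len(self._points)][1]
            if node not in found:
                found.append(node)
                if len(found) == wanted:
                    break
        return found
```

For example, `Ring(["storage_0", "storage_1", "storage_2"]).lookup("bucket/photo.jpg", replicas=2)` returns two distinct node names, and the same key always maps to the same nodes as long as the membership does not change.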

Also, the object-cache mechanism (a hierarchical cache) reduces traffic between Gateway and Storage.
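
The following sketch shows how a cache in front of the storage nodes cuts down the number of storage requests. It is a single-tier, read-through LRU cache bounded by entry count, which is a simplification and not the hierarchical cache LeoFS uses; the class name and the `fetch` callback are hypothetical.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Hypothetical LRU cache that asks storage only on a miss."""

    def __init__(self, fetch, capacity):
        self._fetch = fetch          # callable that reads from a storage node
        self._capacity = capacity    # maximum number of cached objects
        self._items = OrderedDict()  # oldest entry first
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._items:
            # Cache hit: no traffic to storage; mark the entry as recently used.
            self._items.move_to_end(key)
            self.hits += 1
            return self._items[key]
        # Cache miss: fetch from storage and remember the result.
        self.misses += 1
        value = self._fetch(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # evict the least recently used
        return value
```

Every hit is one request that never reaches a storage node, which is why the cache matters most for frequently read small objects.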

LeoFS Storage

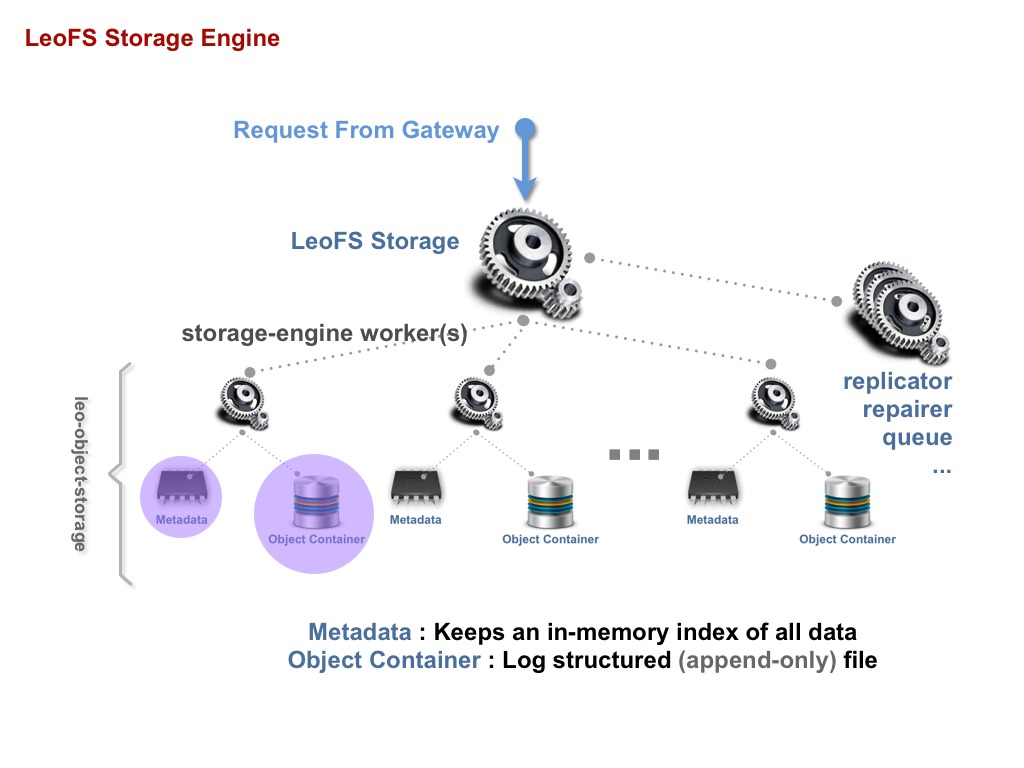

“LeoFS-Storage” consists of a storage engine, an object replicator, an object repairer, message queuing, and so on. Each storage-engine worker consists of metadata storage and object containers, which are log-structured files.

A file (raw data), as well as its metadata, is replicated to other nodes up to the defined number of replicas.

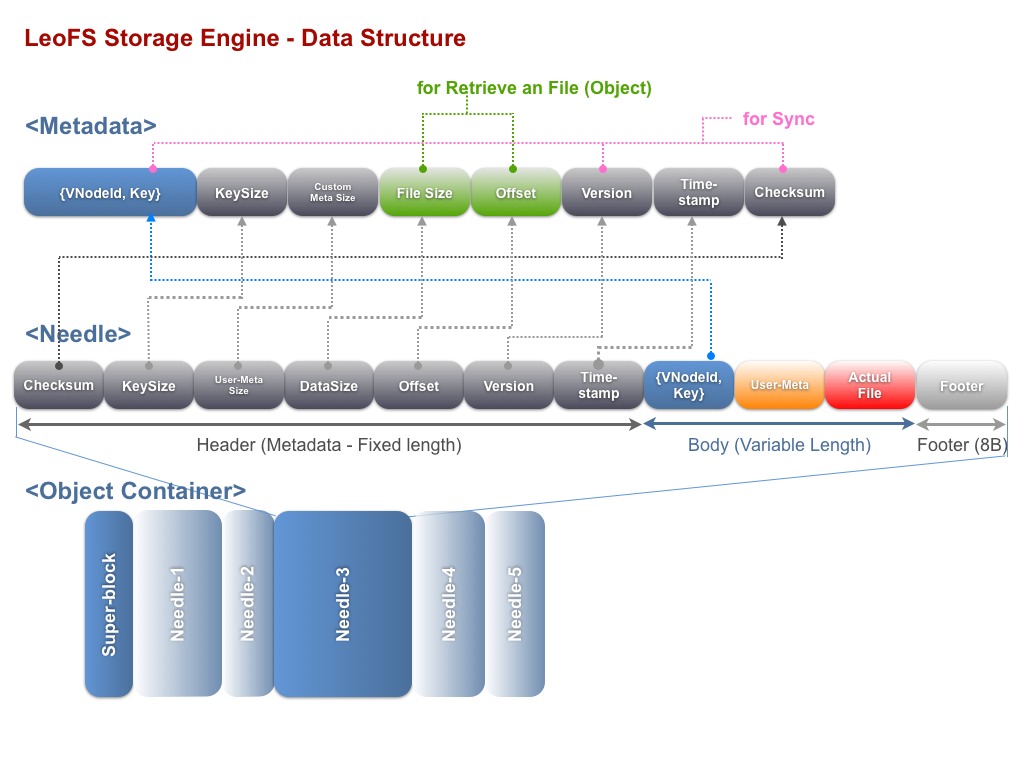

LeoFS’s data structure has three layers.

Metadata consists of the filename, file size, checksum, and so on. An actual object is retrieved using the filename, file size and offset.

A needle is LeoFS’s original file format. It consists of metadata, the actual file and a footer, so metadata can be recovered from a needle.

An object container consists of a super-block and any number of needles.
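
To show why a needle that carries its own metadata makes recovery possible, here is a minimal sketch. The byte layout (field order, field widths, CRC32 checksum, footer marker) is invented for this example and is not LeoFS's actual needle format; the super-block is left out for brevity.

```python
import struct
import zlib

# Hypothetical layout: key length, body size, CRC32 of the body (big-endian).
HEADER = struct.Struct(">HII")
FOOTER = b"\x00NEEDLE\x00"  # hypothetical end-of-needle marker


def pack_needle(key: str, body: bytes) -> bytes:
    """Serialize one object as header + key + body + footer."""
    raw_key = key.encode("utf-8")
    header = HEADER.pack(len(raw_key), len(body), zlib.crc32(body))
    return header + raw_key + body + FOOTER


def unpack_needle(buf: bytes, offset: int = 0):
    """Return (metadata, body, next_offset) for the needle starting at offset."""
    key_len, size, checksum = HEADER.unpack_from(buf, offset)
    key_start = offset + HEADER.size
    body_start = key_start + key_len
    body_end = body_start + size
    body = buf[body_start:body_end]
    if buf[body_end:body_end + len(FOOTER)] != FOOTER:
        raise ValueError("footer missing: needle is truncated or corrupt")
    if zlib.crc32(body) != checksum:
        raise ValueError("checksum mismatch")
    metadata = {
        "key": buf[key_start:body_start].decode("utf-8"),
        "size": size,
        "checksum": checksum,
        "offset": offset,
    }
    return metadata, body, body_end + len(FOOTER)


def recover_metadata(container: bytes) -> dict:
    """Rebuild the metadata index by walking every needle in a container."""
    index = {}
    offset = 0
    while offset < len(container):
        metadata, _body, offset = unpack_needle(container, offset)
        index[metadata["key"]] = metadata
    return index
```

If the metadata storage were lost, scanning the containers this way would be enough to rebuild it, because each needle repeats the key, size and checksum of its object.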

- When retrieving an object from the storage:

  - First, the storage engine retrieves the metadata from the metadata storage.

  - Then it retrieves the object from the object container, using the file size and the offset in the container.

- When inserting an object into the storage:

  - First, the storage engine inserts the metadata into the metadata storage.

  - Then it appends the object to the object container.
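
The two flows above can be sketched as follows. This is a simplified in-memory model, not LeoFS code: the `StorageEngine` class is hypothetical, a dict stands in for the metadata storage, a `bytearray` stands in for the log-structured object container, and CRC32 is an assumed checksum.

```python
import zlib


class StorageEngine:
    """Hypothetical model of metadata storage plus an append-only container."""

    def __init__(self):
        self.metadata = {}            # key -> (offset, size, checksum)
        self.container = bytearray()  # objects are only ever appended

    def put(self, key: str, body: bytes) -> None:
        # First, record the metadata: the object will start at the current end.
        offset = len(self.container)
        self.metadata[key] = (offset, len(body), zlib.crc32(body))
        # Then append the object itself to the container.
        self.container += body

    def get(self, key: str) -> bytes:
        # First, look up the metadata.
        offset, size, checksum = self.metadata[key]
        # Then read exactly `size` bytes at `offset` from the container.
        body = bytes(self.container[offset:offset + size])
        if zlib.crc32(body) != checksum:
            raise IOError(f"checksum mismatch for {key!r}")
        return body
```

Note that overwriting a key appends a new copy and leaves the old bytes in the container, so the container only grows until unreferenced data is removed.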

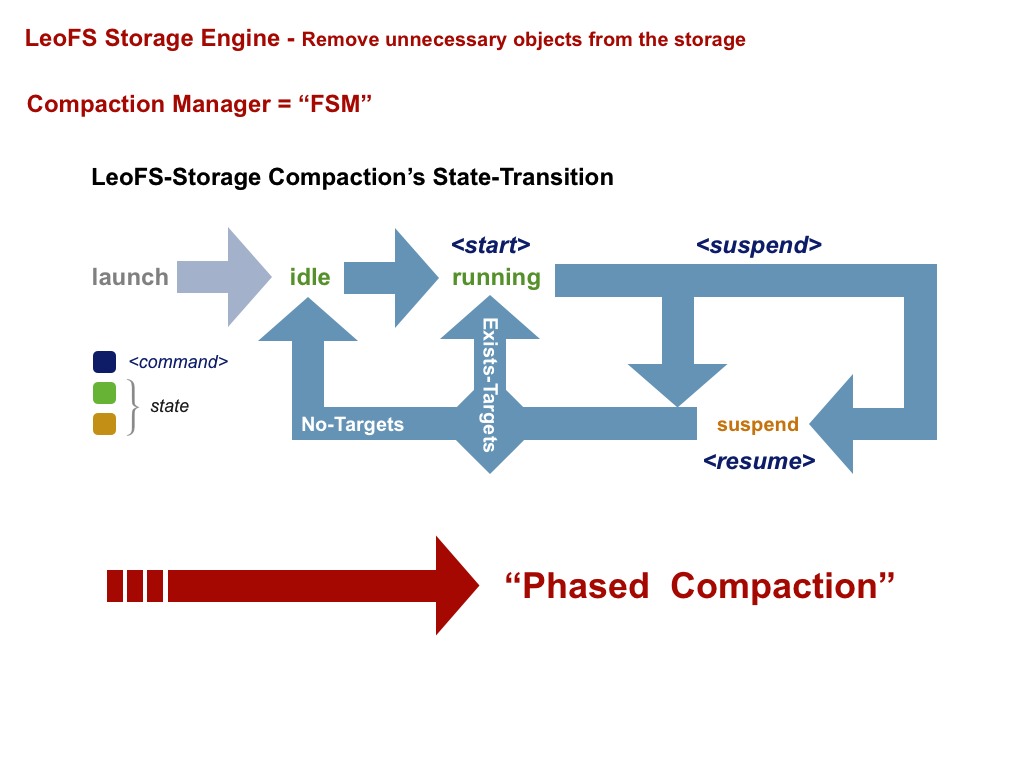

This mechanism has a side effect: storage engines sometimes need to remove unnecessary objects and their metadata (compaction). To reduce the impact of compaction, LeoFS uses phased compaction. We plan to support auto-compaction in LeoFS v0.14.2.
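
As a rough picture of what compaction does, the sketch below copies only the objects still referenced by metadata into a fresh container and rewrites their offsets. It is a single-pass illustration with a hypothetical `compact` function; it does not model the phased compaction LeoFS uses.

```python
def compact(container: bytes, metadata: dict):
    """Copy live objects into a new container.

    `metadata` maps key -> (offset, size) for objects that are still live.
    Returns (new_container, new_metadata); bytes not referenced by any
    metadata entry are dropped.
    """
    new_container = bytearray()
    new_metadata = {}
    # Walk live objects in their on-disk order so the copy is sequential.
    for key, (offset, size) in sorted(metadata.items(), key=lambda kv: kv[1][0]):
        new_metadata[key] = (len(new_container), size)
        new_container += container[offset:offset + size]
    return bytes(new_container), new_metadata
```

Because every live object is read and rewritten, compaction competes with normal requests for disk I/O, which is the cost that phasing is meant to reduce.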

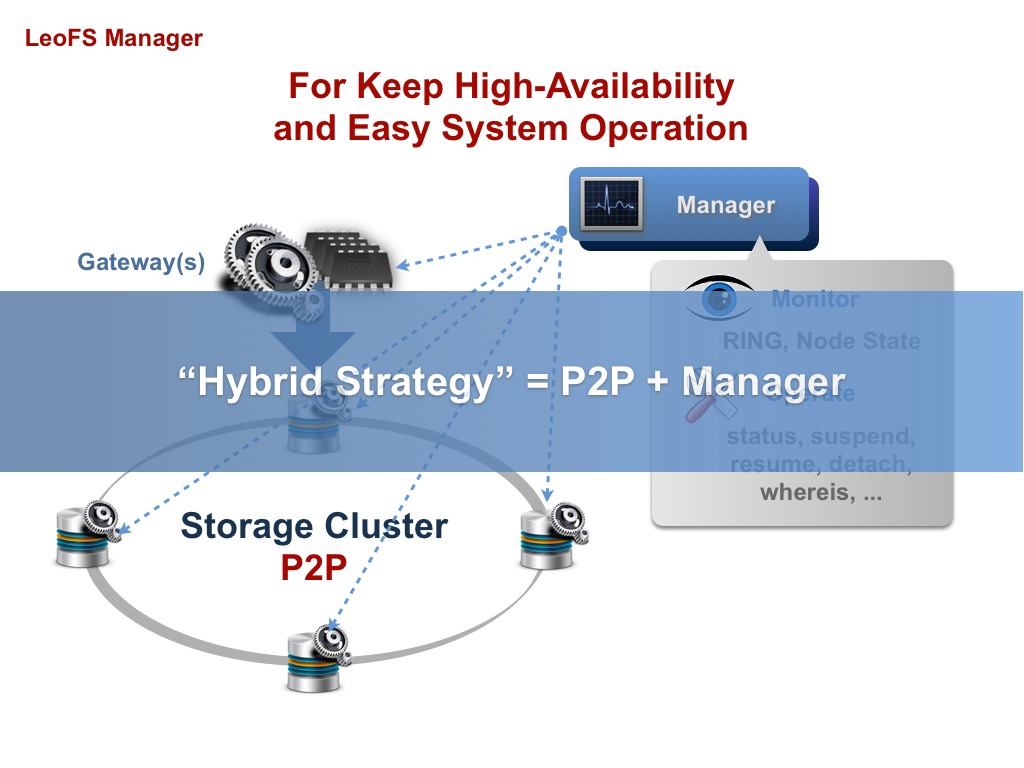

LeoFS Manager

Manager distributes the “RING” and the list of storage-cluster members. It constantly monitors node status and RING status, because LeoFS is required to be highly available.

Manager also provides simple operation commands (suspend, resume, detach, whereis, etc.) for Gateway and Storage, and it provides the RING to them; in other words, “LeoFS-manager” manages the “RING”. If Manager finds an inconsistent RING, it repairs it. If Storage finds one, it notifies Manager, and the problem is eventually resolved by Manager.
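
Comparing RING checksums can be pictured with the sketch below. It is only an illustration: the ring representation, the CRC32 checksum and the function names are assumptions, not LeoFS's actual data structures or protocol.

```python
import zlib


def ring_checksum(ring) -> int:
    """Checksum of a ring given as (vnode_id, node_name) pairs, in any order."""
    canonical = "\n".join(f"{vnode}:{node}" for vnode, node in sorted(ring))
    return zlib.crc32(canonical.encode("utf-8"))


def find_inconsistent_nodes(manager_ring, node_rings: dict) -> list:
    """Names of nodes whose ring checksum differs from the manager's."""
    expected = ring_checksum(manager_ring)
    return sorted(
        name for name, ring in node_rings.items() if ring_checksum(ring) != expected
    )
```

Exchanging a small checksum instead of the whole ring keeps the monitoring traffic low; only the nodes reported as inconsistent would need the full RING sent to them again.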

Future Works

LeoFS-QoS

- QoS provides two things:

  - First, it can control requests from clients to LeoFS per one-minute window: QoS refuses requests from a client once that client exceeds a defined request threshold.

  - Second, it can store LeoFS’s traffic data and show it to administrators.
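
The first point can be sketched as a per-client counter over a one-minute window. Since LeoFS-QoS is future work, this is purely a hypothetical illustration: the `RequestLimiter` class and the fixed-window strategy are assumptions, and the caller passes the current time explicitly to keep the example deterministic.

```python
class RequestLimiter:
    """Hypothetical fixed-window limiter: at most N requests per client per window."""

    def __init__(self, max_requests: int, window_seconds: float = 60.0):
        self._max = max_requests
        self._window = window_seconds
        self._windows = {}  # client -> (window_start, request_count)

    def allow(self, client: str, now: float) -> bool:
        """Return True if the request is accepted, False if it is refused."""
        start, count = self._windows.get(client, (now, 0))
        if now - start >= self._window:
            # The previous window has ended: start counting again.
            start, count = now, 0
        if count >= self._max:
            self._windows[client] = (start, count)
            return False
        self._windows[client] = (start, count + 1)
        return True
```

Each client has its own counter, so one client exceeding the threshold does not affect requests from the others.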

OpenStack Integration

So that both we and you can build a cloud platform (IaaS).

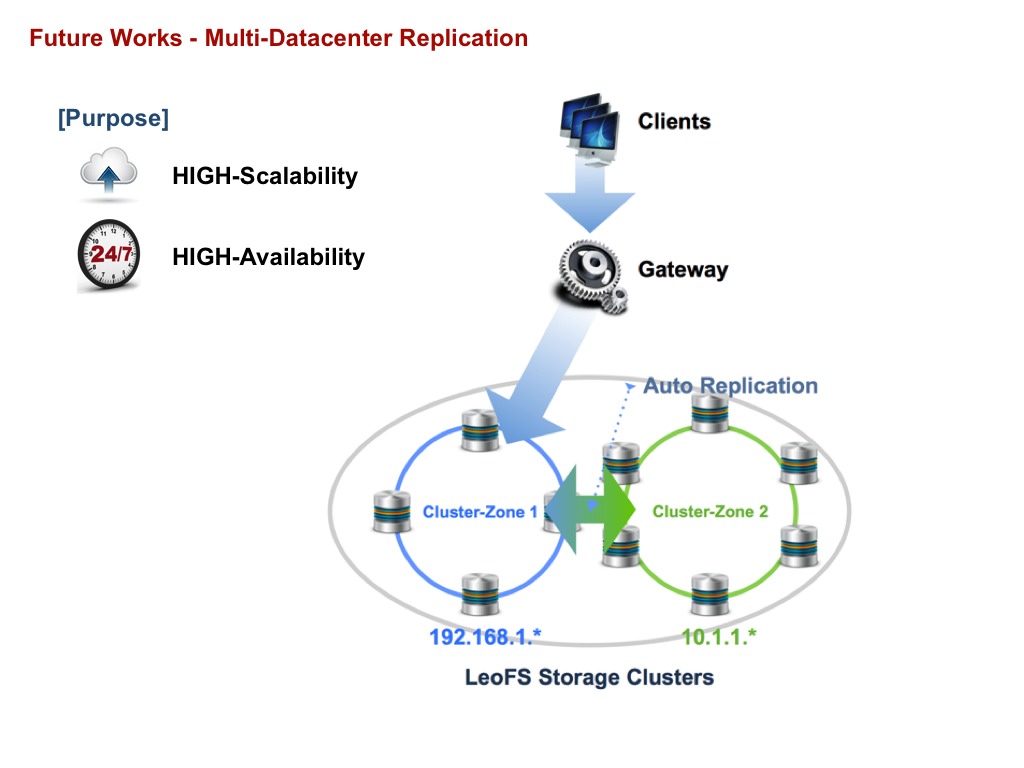

Multi-DC data replication

We expect higher scalability and higher availability.

Wrap up

We keep improving and growing LeoFS. We will achieve three important things, high cost-performance ratio, high reliability, and high scalability, this summer.

LeoFS is a powerful storage system. I think you’ll agree once you have a chance to use it. If you have any questions, please contact us.