Quick Start LeoFS with Ruby-client

June 25, 2014

Introduction

This article shows you how to develop and architect a Ruby application for LeoFS. It assumes that you have already installed a LeoFS environment on your local or remote node. See Getting Started with LeoFS for more information.

Installing and Setting Up the S3 Ruby Client

The easiest way to install Ruby is through your distribution's package tools: yum on CentOS, Fedora and RHEL, or apt-get together with RVM on Debian and Ubuntu. You will also need some additional dependencies.

For CentOS, Fedora and RHEL

```shell
##### Install Ruby, SDK and Dependencies #####
$ sudo yum install ruby
$ sudo yum install gcc gcc-c++ make automake autoconf curl-devel \
    openssl-devel zlib-devel httpd-devel
$ sudo yum install apr-devel apr-util-devel sqlite-devel
$ sudo yum install ruby-rdoc ruby-devel
```

Once Ruby is installed, you can install RubyGems:

```shell
$ sudo yum install rubygems
```

For Debian and Ubuntu

```shell
##### Install Ruby, SDK and Dependencies #####
$ sudo apt-get install curl libmagic-dev

## To install RVM
$ curl -L https://get.rvm.io | bash -s stable

## To load RVM into your shell
$ source ~/.rvm/scripts/rvm

## To install dependencies
$ rvm requirements

## To install Ruby and make it the default interpreter
$ rvm install ruby
$ rvm use ruby --default
```

Once Ruby is installed, you can install RubyGems:

```shell
$ rvm rubygems current
```

To check your current default interpreter, run the following:

```shell
$ ruby -v
```

```shell
##### Download Sample Project #####
$ git clone https://github.com/leo-project/leofs_client_tests.git
$ cd leofs_client_tests/aws-sdk-ruby
$ gem install aws-sdk -v 1.9.5
$ gem install content_type
```

About the sample

This sample application is designed to show you how to:

- Declare a dependency on the aws-sdk using RubyGems.

- Read access keys from environment variables or define them statically (this sample uses static values).

- Instantiate an Amazon S3 client.

- Interact with Amazon S3 in various ways, such as creating a bucket and uploading a file.

The project's README file contains more information about this sample code. If you have trouble getting set up or have other feedback about this sample code, let us know on GitHub.

API feature list

The storage API is compatible with the Amazon S3 REST API, which means that the operations listed below can be executed with any of the commonly available S3 libraries or tools.

Bucket-level operations

- GET Bucket - Returns a list of the objects within a bucket

- GET Bucket ACL - Returns the ACL associated with a bucket

- PUT Bucket - Creates a new bucket

- PUT Bucket ACL - Sets the ACL permissions for a bucket

- HEAD Bucket - Retrieves bucket metadata

- DELETE Bucket - Deletes a bucket

Object-level operations

- GET Object - Retrieves an object

- LIST Object - Retrieves an object list

- PUT Object - Stores an object to a bucket

- PUT Object (Copy) - Creates a copy of an object

- HEAD Object - Retrieves object metadata (not the full content of the object)

- DELETE Object - Deletes an object

Multipart upload

- Initiate Multipart Upload - Initiates a multipart upload and returns an upload ID

- Upload Part - Uploads a part in a multipart upload

- Complete Multipart Upload - Completes a multipart upload and assembles previously uploaded parts

- Abort Multipart Upload - Aborts a multipart upload and eventually frees storage consumed by previously uploaded parts.

- List Parts - Lists the parts that have been uploaded for a specific multipart upload.

- List Multipart Uploads - Lists multipart uploads that have not yet been completed or aborted.

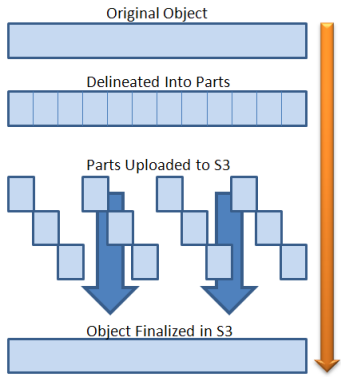

Multipart upload allows you to upload a single object as a set of parts. Object parts can be uploaded independently and in any order. After all parts are uploaded, LeoFS assembles the object from the parts. When your object size reaches 100MB, you should consider using multipart uploads instead of uploading the object in a single operation. Read more about parallel multipart uploads here.
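As a rough illustration of the 100MB guideline above, the following sketch decides between a single-part and a multipart upload and counts the parts. The 100MB threshold and the 5MB part size mirror the numbers used in this article; `plan_upload` is a hypothetical helper, not part of aws-sdk.

```ruby
# Hypothetical helper: choose an upload strategy for an object of
# `size` bytes. The 100 MB threshold and 5 MB part size follow the
# guideline in this article; adjust them for your own deployment.
MULTIPART_THRESHOLD = 100 * 1024 * 1024 # 100 MB
PART_SIZE = 5 * 1024 * 1024             # 5 MB

def plan_upload(size, threshold: MULTIPART_THRESHOLD, part_size: PART_SIZE)
  raise ArgumentError, "size must be non-negative" if size.negative?
  return { strategy: :single, parts: 1 } if size < threshold

  # Ceiling division: the last part may be smaller than part_size.
  parts = (size + part_size - 1) / part_size
  { strategy: :multipart, parts: parts }
end

plan = plan_upload(120 * 1024 * 1024)
puts "#{plan[:strategy]} upload in #{plan[:parts]} parts" # prints "multipart upload in 24 parts"
```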

Sample methods:

The complete API reference is available at Aws::S3Interface. The snippets below come from our sample script and cover the major methods supported by LeoFS.

Creating a connection

A simple way to specify your credentials is to pass them directly to AWS.config before instantiating the client object. However, be careful not to hard-code credentials inside your applications; hard-coded credentials can be dangerous. Depending on your bucket name, set up a sub-domain name entry as described on this page. For more details, see aws-sdk-ruby/AWS#config-class_method.
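To avoid hard-coding the keys defined in the sample below, one option is to read them from the same environment variables the SDK looks for and fall back to the LeoFS default credentials only for local testing. This sketch uses only the standard library; `leofs_settings` and the `LEOFS_ENDPOINT`/`LEOFS_PORT` variable names are hypothetical.

```ruby
# Sketch: collect connection settings from the environment instead of
# hard-coding them. LEOFS_ENDPOINT and LEOFS_PORT are hypothetical names;
# the fallbacks are the sample values used in this article.
def leofs_settings(env = ENV)
  {
    access_key_id:     env.fetch("AWS_ACCESS_KEY_ID", "05236"),
    secret_access_key: env.fetch("AWS_SECRET_ACCESS_KEY", "802562235"),
    endpoint:          env.fetch("LEOFS_ENDPOINT", "localhost"),
    port:              Integer(env.fetch("LEOFS_PORT", "8080"))
  }
end

settings = leofs_settings
puts "Connecting to #{settings[:endpoint]}:#{settings[:port]}"
```

The resulting values could then be passed to `AWS.config` in place of the constants, e.g. `access_key_id: settings[:access_key_id]`.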

```ruby
## This code supports "aws-sdk v1.9.5"
require "aws-sdk"
require "content_type"
require "digest/md5"

## Global variable setting
## AccessKeyId     ==> replace with your Access Key ID
## SecretAccessKey ==> replace with your Secret Access Key
## Endpoint        ==> your LeoFS service address
## The credentials given here are LeoFS's default user's credentials
Endpoint = "localhost"
Port = 8080
AccessKeyId = "05236"
SecretAccessKey = "802562235"
FileName = "testFile"
ChunkSize = 5 * 1024 * 1024 ## 5 MB chunk size
Bucket = "test" + rand(99999).to_s

# You can also use dynamic configuration by setting your environment
# in your shell. With no parameters or configuration, the AWS SDK for
# Ruby looks for access keys and region in environment variables:
#
#   AWS_ACCESS_KEY_ID='...'
#   AWS_SECRET_ACCESS_KEY='...'

# LeoFS HTTP handler: send every request to the LeoFS gateway port
class LeoFSHandler < AWS::Core::Http::NetHttpHandler
  def handle(request, response)
    request.port = ::Port
    super
  end
end

## Manual credential and configuration setup; you can skip this step
## if you are using environment variables
SP = AWS::Core::CredentialProviders::StaticProvider.new(
  {
    :access_key_id => AccessKeyId,
    :secret_access_key => SecretAccessKey
  })

AWS.config(
  access_key_id: AccessKeyId,
  secret_access_key: SecretAccessKey,
  s3_endpoint: Endpoint,
  http_handler: LeoFSHandler.new,
  credential_provider: SP,
  s3_force_path_style: true,
  use_ssl: false
)

# Instantiate a new client for Amazon Simple Storage Service (S3)
s3 = AWS::S3.new
```
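The configuration above sets `s3_force_path_style: true`, which puts the bucket name in the request path rather than in the host name, so no per-bucket sub-domain entry is needed. The sketch below builds both URL forms for comparison; `object_url` is a hypothetical helper, not part of aws-sdk, and keys are assumed to be URL-safe already.

```ruby
# Hypothetical helper: the URL an S3-compatible client requests for
# `key` in `bucket`. Keys are assumed to be URL-safe already.
def object_url(endpoint, port, bucket, key, path_style: true, ssl: false)
  scheme = ssl ? "https" : "http"
  if path_style
    # Path style: the bucket is the first path segment, so a plain
    # host name such as "localhost" is enough.
    "#{scheme}://#{endpoint}:#{port}/#{bucket}/#{key}"
  else
    # Virtual-hosted style: the bucket becomes a sub-domain, which
    # requires a DNS entry such as test123.localhost.
    "#{scheme}://#{bucket}.#{endpoint}:#{port}/#{key}"
  end
end

puts object_url("localhost", 8080, "test123", "testFile")
# prints http://localhost:8080/test123/testFile
puts object_url("localhost", 8080, "test123", "testFile", path_style: false)
# prints http://test123.localhost:8080/testFile
```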

Creating a bucket

The following creates a bucket. Be careful: the bucket name must be globally unique and DNS-compatible; otherwise, the request raises an error. For more information about bucket name restrictions, see Bucket Restrictions and Limitations.

```ruby
## Create bucket
s3.buckets.create(Bucket)
puts "Bucket Created Successfully\n"
```
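Because an invalid bucket name only fails once the request reaches the server, you may want to validate names up front. The following sketch approximates the DNS-compatible naming rules documented for Amazon S3; LeoFS's exact rules may differ, and `dns_compatible_bucket_name?` is a hypothetical helper.

```ruby
# Approximate check for a DNS-compatible bucket name, based on the
# Amazon S3 naming rules (LeoFS's own rules may differ):
#   * 3 to 63 characters
#   * lowercase letters, digits, hyphens and dots only
#   * starts and ends with a letter or digit
#   * no two adjacent dots, and not formatted like an IPv4 address
def dns_compatible_bucket_name?(name)
  return false unless name.length.between?(3, 63)
  return false unless name.match?(/\A[a-z0-9][a-z0-9.-]*[a-z0-9]\z/)
  return false if name.include?("..")
  return false if name.match?(/\A\d{1,3}(\.\d{1,3}){3}\z/)
  true
end

bucket_name = "test" + rand(99999).to_s # same pattern as the sample's Bucket
puts dns_compatible_bucket_name?(bucket_name) # prints "true"
```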

Does the bucket exist?

A simple way to check whether a bucket exists and whether you have permission to access it. The operation returns 200 OK if the bucket exists and you have permission to access it. Otherwise, it may return responses such as 404 Not Found or 403 Forbidden. For more details, see AWS::S3::Bucket.

```ruby
## Check that the bucket exists
unless s3.buckets[Bucket].exists?
  raise "Bucket doesn't exist"
end
```
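The status codes described above can be summarized as a small lookup. This sketch shows how a caller might interpret a raw HEAD Bucket status code; it is not an aws-sdk API, and the helper name and return symbols are hypothetical.

```ruby
# Sketch: interpret the status code of a HEAD Bucket request.
# The returned symbols are this sketch's own labels.
def bucket_status(http_status)
  case Integer(http_status)
  when 200 then :exists     # bucket exists and you can access it
  when 403 then :forbidden  # access denied (the bucket may exist, but you lack permission)
  when 404 then :not_found  # no such bucket
  else :unknown             # anything else: inspect the full response
  end
end

puts bucket_status(404) # prints "not_found"
```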

Get buckets

You can get a single bucket by name with buckets['bucket-name'], and enumerate all the buckets owned by your account with buckets.each. For more details, see AWS::S3::BucketCollection.

```ruby
## Get bucket
bucket = s3.buckets[Bucket]
puts "Get Bucket Successfully\n\n"

## List all buckets owned by the account
s3.buckets.each do |b|
  puts b.name
end
```

Single-part object upload

The following uploads an object from your file system with the single-part method, which is recommended for objects smaller than 100MB. For more details, see this page.

```ruby
## PUT single-part upload object
file_path = "../temp_data/" + FileName
fileObject = File.open(file_path, "rb")

## PUT object using single-part method
obj = bucket.objects[FileName + ".single"].write(file: file_path, content_type: fileObject.content_type)
```

Multi-part object upload

Multipart upload allows you to upload a single object as a set of parts. Each part is a contiguous portion of the object's data. You can upload these parts independently and in any order. If transmission of any part fails, you can retransmit that part without affecting other parts. After all parts of your object are uploaded, LeoFS assembles them and creates the object. In general, when your object size reaches 100 MB, you should consider using multipart uploads instead of uploading the object in a single operation. Advantages include improved throughput, quick recovery from network issues, the ability to pause and resume uploads, and the ability to begin an upload before you know the final object size. For more details, see AWS::S3::MultipartUpload.

```ruby
## PUT object using multi-part method
puts "File is being uploaded:\n"
counter = fileObject.size / ChunkSize
uploading_object = bucket.objects[File.basename(fileObject.path)]
fileObject.seek(0) # make sure we read from the beginning of the file

uploading_object.multipart_upload(:content_type => fileObject.content_type.to_s) do |upload|
  until fileObject.eof?
    puts " #{upload.id} \t\t #{counter} "
    counter -= 1
    ## Each part is ChunkSize bytes (5 MB = 5242880 bytes); the last part may be smaller
    upload.add_part(fileObject.read(ChunkSize))
    p("Aborted") if upload.aborted?
  end
end

puts "File Uploaded Successfully\n\n"
```
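The loop above reads the file ChunkSize bytes at a time until EOF, so every part except possibly the last is exactly ChunkSize bytes. The same chunking logic can be tried in isolation with a `StringIO` in place of the file; `each_part` is a hypothetical helper, and in the sample each yielded chunk would go to `upload.add_part`.

```ruby
require "stringio"

# Sketch of the chunking loop: read `io` in fixed-size pieces and yield
# each piece with its 1-based part number. Returns the number of parts.
def each_part(io, part_size)
  return enum_for(:each_part, io, part_size) unless block_given?

  part_number = 0
  # IO#read(n) returns nil at end of file, which ends the loop
  while (chunk = io.read(part_size))
    part_number += 1
    yield part_number, chunk
  end
  part_number
end

data = StringIO.new("a" * 12) # 12 bytes stand in for a real file
each_part(data, 5) { |n, chunk| puts "part #{n}: #{chunk.bytesize} bytes" }
# prints "part 1: 5 bytes", "part 2: 5 bytes", "part 3: 2 bytes"
```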

List a bucket’s contents

Here we request an object iterator and loop over it to retrieve the desired information about each object (the object key and size in this case), raising an error if an object's size differs from the local file's size. For more details, see AWS::S3::ObjectCollection.

```ruby
## List objects in the bucket
bucket.objects.with_prefix("").each do |obj|
  unless fileObject.size.eql? obj.content_length
    raise " Content length is changed for : #{obj.key}"
  end
  puts "#{obj.key} \t #{obj.content_length}"
end
```

Head an object

Files in Amazon S3 and LeoFS are called objects and are stored in buckets. A specific object is referred to by its key (i.e., name) and holds data. Here, a HEAD request retrieves the metadata of the objects uploaded earlier, e.g. ContentLength, ETag and ContentType, without downloading their content. For more details, see AWS::S3::S3Object.

```ruby
## HEAD object
fileObject.seek(0)
fileDigest = Digest::MD5.hexdigest(fileObject.read)

## Single-part object: compare size and MD5 digest (ETag)
metadata = bucket.objects[FileName + ".single"].head
## for future use: && (fileObject.content_type.eql? metadata.content_type)
if !((fileObject.size.eql? metadata.content_length) && (fileDigest.eql? metadata.etag.gsub('"', '')))
  raise "Single Part File Metadata could not match"
else
  puts "Single Part File MetaData :"
  p metadata
end

## Multipart object: compare size only
## for future use: && (fileObject.content_type.eql? metadata.content_type)
metadata = bucket.objects[FileName].head
if !(fileObject.size.eql? metadata.content_length)
  raise "Multipart File Metadata could not match"
else
  puts "Multipart Part File MetaData :"
  p metadata
end
```
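The single-part check above relies on the ETag being the hex MD5 digest of the body, wrapped in double quotes. This standalone sketch isolates that comparison with the standard library; `etag_matches?` is a hypothetical helper. It is not meant for the multipart object, whose ETag is generally not a plain MD5 of the whole content (in Amazon S3 it is derived from the part digests), which is presumably why the sample only compares sizes there.

```ruby
require "digest/md5"

# Hypothetical helper: does a single-part ETag (a quoted hex MD5 string)
# match the MD5 digest of the local data? Quotes are stripped first.
def etag_matches?(data, etag)
  etag.delete('"') == Digest::MD5.hexdigest(data)
end

body = "hello"
server_etag = "\"#{Digest::MD5.hexdigest(body)}\"" # simulated HEAD response value
puts etag_matches?(body, server_etag)       # prints "true"
puts etag_matches?(body + "!", server_etag) # prints "false"
```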

GET an object

The following downloads an object from LeoFS into the current directory with the read method, which streams the object in chunks, and then checks the downloaded file's size and MD5 digest. For more details, see AWS::S3::S3Object.

```ruby
## Download file from LeoFS to local disk (binary mode)
File.open(FileName + ".copy", "wb+") do |thisfileObject|
  bucket.objects[FileName].read do |chunk|
    thisfileObject.write(chunk)
  end

  thisfileObject.seek(0)
  thisfileDigest = Digest::MD5.hexdigest(thisfileObject.read)

  if !((thisfileObject.size.eql? metadata.content_length) && (fileDigest.eql? thisfileDigest))
    raise "Downloaded File Metadata could not match"
  else
    puts "\nFile Downloaded Successfully\n"
  end
end
```
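The download above writes every chunk and then seeks back to re-read the whole file to compute its MD5. A variation, sketched below with only the standard library, updates a `Digest::MD5` instance as each chunk arrives, so the data is read only once. `copy_with_digest` is a hypothetical helper, and an array of strings stands in for the chunks that `bucket.objects[FileName].read` yields.

```ruby
require "digest/md5"
require "stringio"

# Sketch: write streamed chunks to `out` while computing the MD5 digest,
# instead of seeking back and re-reading the file afterwards.
# Returns [byte_count, hex_digest].
def copy_with_digest(chunks, out)
  md5 = Digest::MD5.new
  bytes = 0
  chunks.each do |chunk|
    out.write(chunk)
    md5.update(chunk)
    bytes += chunk.bytesize
  end
  [bytes, md5.hexdigest]
end

out = StringIO.new # stands in for the destination file
bytes, digest = copy_with_digest(["hel", "lo"], out)
puts "#{bytes} bytes, md5 #{digest}"
```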

Copy an object

The following copies an object within the same bucket (copying to a different bucket is also possible). After copying, we use the exists? method to check that the copied object is present. The Ruby SDK provides two methods for this, copy_from and copy_to. For more details, see AWS::S3::S3Object.

```ruby
## Copy object
bucket.objects[FileName + ".copy"].copy_from(FileName)

unless bucket.objects[FileName + ".copy"].exists?
  raise "File could not Copy Successfully\n"
end

puts "File copied successfully\n"
```

Move/Rename an object

The following moves or renames an object within the same bucket (moving to a different bucket is also possible) and then checks that the moved or renamed object exists. The Ruby SDK provides two methods for this, move_to and rename_to. For more details, see AWS::S3::S3Object.

```ruby
## Move object
obj = bucket.objects[FileName + ".copy"].move_to(FileName + ".org")
unless obj.exists?
  raise "File could not Moved Successfully\n"
end
puts "\nFile move Successfully\n"

## Rename object
obj = bucket.objects[FileName + ".org"].rename_to(FileName + ".copy")
unless obj.exists?
  raise "File could not Rename Successfully\n"
end
puts "\nFile rename Successfully\n"
```

Delete an object

Delete an object from LeoFS by providing the bucket and the object name (key). A multiple-object delete method is currently not supported, but you can achieve the same result by iterating over the objects and deleting them one by one. For more details, see AWS::S3::S3Object.

```ruby
## Delete objects one by one and check that they no longer exist
bucket.objects.with_prefix("").each do |obj|
  obj.delete

  if obj.exists?
    raise "Object is not Deleted Successfully\n"
  end

  # Reading a deleted object should fail with NoSuchKey
  begin
    obj.read
  rescue AWS::S3::Errors::NoSuchKey
    puts "#{obj.key} \t File Deleted Successfully..\n"
    next
  end

  raise "Object is not Deleted Successfully\n"
end
```
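The iterate-and-delete approach described above can be wrapped in a small helper that reports any keys that survive. This sketch only assumes that each object responds to `key`, `delete` and `exists?`, as the objects in the sample do; `delete_all` and the in-memory `FakeObject` stand-in are hypothetical, so the helper can be tried without a LeoFS server.

```ruby
# Sketch: emulate a multi-object delete by deleting objects one at a
# time. Works with any Enumerable of objects responding to #key,
# #delete and #exists?. Returns the keys that still exist afterwards.
def delete_all(objects)
  objects.each_with_object([]) do |obj, leftovers|
    obj.delete
    leftovers << obj.key if obj.exists?
  end
end

# Hypothetical in-memory stand-in for stored objects.
FakeObject = Struct.new(:key, :store) do
  def delete
    store.delete(key)
  end

  def exists?
    store.include?(key)
  end
end

store = %w[testFile testFile.single testFile.copy]
leftovers = delete_all(store.map { |k| FakeObject.new(k, store) })
puts leftovers.empty? # prints "true"
```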

Get a bucket ACL

The following gets a bucket's ACL. LeoFS supports the private, public-read and public-read-write ACL types; object-level ACLs are not supported yet. The Ruby SDK reports permissions as read, read_acp, write and full_control. Here we collect the permission names into an array. For more details, see AWS::S3::Bucket.

```ruby
puts "Owner ID : #{bucket.acl.owner.id}"
puts "Owner Display name : #{bucket.acl.owner.display_name}"

permissions = []
bucket.acl.grants.each do |grant|
  puts "Bucket ACL is : #{grant.permission.name}"
  puts "Bucket Grantee URI is : #{grant.grantee.uri}"
  permissions << grant.permission.name
end
```
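The grants listed above can be mapped back to one of the three ACL types LeoFS supports. The sketch below assumes Amazon S3's canned-ACL semantics (public-read grants READ to the AllUsers group, public-read-write grants READ and WRITE) and that LeoFS reports the AllUsers grantee with the standard S3 group URI; both are assumptions, and `canned_acl` is a hypothetical helper. Grants are passed as `[grantee_uri, permission_name]` pairs, such as you could build from `grant.grantee.uri` and `grant.permission.name`.

```ruby
# Assumed AllUsers group URI, as used by Amazon S3.
ALL_USERS_URI = "http://acs.amazonaws.com/groups/global/AllUsers"

# Sketch: classify grants into :private, :public_read or
# :public_read_write based on what the AllUsers group may do.
def canned_acl(grants)
  public_perms = grants
    .select { |uri, _perm| uri == ALL_USERS_URI }
    .map { |_uri, perm| perm.to_s.downcase.to_sym }

  if public_perms.include?(:read) && public_perms.include?(:write)
    :public_read_write
  elsif public_perms.include?(:read)
    :public_read
  else
    :private
  end
end

puts canned_acl([[nil, :full_control], [ALL_USERS_URI, :read]]) # prints "public_read"
```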

Put a bucket ACL

Set a bucket's ACL to restrict access with one of the supported ACL types. For more details, see AWS::S3::Bucket.

```ruby
bucket.acl = :private
# OR
bucket.acl = :public_read
# OR
bucket.acl = :public_read_write
```

Delete a bucket

Delete a bucket by first removing its contents with clear! and then calling delete. For more details, see this page.

```ruby
## Bucket delete
bucket = s3.buckets[Bucket]
bucket.clear! # delete all objects in the bucket first
bucket.delete
puts "Bucket deleted Successfully\n"
```

Test script code:

This test script exercises the well-known methods of the Ruby SDK described above. It requires a sample file named “testFile” at ../temp_data/testFile, relative to your project directory. The script is the leo.rb file in the downloaded project, or you can access it here.
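If you do not have a sample file yet, the following sketch creates one at the expected location. The 12 MB size is an assumption chosen so that, with the 5 MB ChunkSize, the multipart step uploads three parts; `create_test_file` is a hypothetical helper, not part of leo.rb.

```ruby
require "fileutils"
require "securerandom"

# Hypothetical helper: write `size` random bytes to `path`, creating the
# directory if needed, and return the resulting file size. The default
# path matches the location the test script reads from.
def create_test_file(path = "../temp_data/testFile", size = 12 * 1024 * 1024)
  FileUtils.mkdir_p(File.dirname(path))
  File.open(path, "wb") do |f|
    remaining = size
    while remaining > 0
      n = [remaining, 1024 * 1024].min # write in 1 MB pieces
      f.write(SecureRandom.random_bytes(n))
      remaining -= n
    end
  end
  File.size(path)
end
```

Call `create_test_file` once from your project directory before running the test script.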

Test script output:

You can find sample output of this script in LeoFS_Ruby_Client_Testing_Script_Result - Gist.
