LeoStorage Settings

Prior Knowledge

Note: Configuration

LeoStorage's features depend on its configuration. Once a LeoFS system has been launched, you cannot modify the following LeoStorage settings, because the data-operation algorithms strictly adhere to them.

Irrevocable and Attention Required Items:

| Item | Irrevocable? | Description |
|---|---|---|
| LeoStorage Basic | | |
| obj_containers.path | Modifiable with condition | You can change the directory of the container(s), but you cannot add or remove directories. You need to move the data files under <obj_containers.path>/avs/object and <obj_containers.path>/avs/metadata so that they match the new setting. |
| obj_containers.num_of_containers | Yes | Cannot be changed, because LeoStorage would no longer be able to retrieve objects or metadata. If you want to modify this setting in order to add disk volumes to LeoFS, follow the instructions here [3]. |
| obj_containers.metadata_storage | Yes | As above |
| num_of_vnodes | Yes | As above |
| MQ | | |
| mq.backend_db | Modifiable with condition | All of the MQ's data is lost after the change |
| mq.num_of_mq_procs | Modifiable with condition | As above |
| Replication and Recovery object(s) | | |
| replication.rack_awareness.rack_id | Yes | Cannot be changed, because LeoFS would no longer be able to retrieve objects or metadata. |
| Other Directories Settings | | |
| queue_dir | Modifiable with condition | You can change the MQ's directory, but you need to move the MQ's data so that it matches the new setting. |

Other Configurations

If you want to modify settings such as where leo_storage.conf is placed or which user starts a LeoStorage process, refer to For Administrators / Settings / Environment Configuration for more information.

Configuration

LeoStorage Configurations

| Item | Description |
|---|---|
| LeoManager Nodes | |
| managers | Names of the LeoManager nodes. This setting is required for communicating with LeoManager's master and LeoManager's slave. ( Default: [[email protected], [email protected]] ) |
| LeoStorage Basic | |
| obj_containers.path | Directories of object-containers ( Default: [./avs] ) |
| obj_containers.num_of_containers | Number of object-containers in each directory. Because backend_db.eleveldb.write_buf_size * obj_containers.num_of_containers memory can be consumed in total, take both into account to meet your memory footprint requirements on LeoStorage. ( Default: [8] ) |
| obj_containers.sync_mode | Mode of the data synchronization. There are three modes: ( Default: none ) |
| obj_containers.sync_interval_in_ms | Interval of the data synchronization ( Default: 1000, Unit: ms ) |
| obj_containers.metadata_storage | The metadata storage is pluggable and depends on bitcask or leveldb. ( Default: leveldb ) |
| num_of_vnodes | The total number of virtual-nodes of a LeoStorage node for generating the distributed hashtable, RING ( Default: 168 ) |
| object_storage.is_strict_check | Enables a strict check between the checksum stored in the metadata and the checksum of the object. ( Default: false ) |
| object_storage.threshold_of_slow_processing | Threshold of slow processing ( Default: 1000, Unit: ) |
| seeking_timeout_per_metadata | Timeout for seeking metadata, per metadata entry ( Default: 10, Unit: ) |
| max_num_of_procs | Maximum number of processes for both write and read operations ( Default: 3000 ) |
| num_of_obj_storage_read_procs | Total number of obj-storage-read processes per object-container, AVS ( Default: 3 ) |
| Watchdog | |
| watchdog.common.loosen_control_at_safe_count | When the count of safe states (watchdog cleared) reaches this value, the watchdog loosens its control ( Default: 1 ) |
| Watchdog / REX | |
| watchdog.rex.is_enabled | Enables or disables the rex-watchdog, which monitors the memory usage of Erlang's RPC component. ( Default: true ) |
| watchdog.rex.interval | Interval between executions of the watchdog processing ( Default: 10, Unit: ) |
| watchdog.rex.threshold_mem_capacity | Threshold of memory capacity of binary for Erlang rex ( Default: 33554432, Unit: ) |
| Watchdog / CPU | |
| watchdog.cpu.is_enabled | Enables or disables the CPU-watchdog, which monitors both CPU load average and CPU utilization ( Default: false ) |
| watchdog.cpu.raised_error_times | Number of times an error is raised to a client ( Default: 5 ) |
| watchdog.cpu.interval | Interval between executions of the watchdog processing ( Default: 10, Unit: ) |
| watchdog.cpu.threshold_cpu_load_avg | Threshold of CPU load average ( Default: 5.0 ) |
| watchdog.cpu.threshold_cpu_util | Threshold of CPU utilization ( Default: 100 ) |
| Watchdog / DISK | |
| watchdog.disk.is_enabled | Enables or disables the disk-watchdog ( Default: false ) |
| watchdog.disk.raised_error_times | Number of times an error is raised to a client ( Default: 5 ) |
| watchdog.disk.interval | Interval between executions of the watchdog processing ( Default: 10, Unit: ) |
| watchdog.disk.threshold_disk_use | Threshold of disk use, as a percentage of the target disk's capacity ( Default: 85, Unit: % ) |
| watchdog.disk.threshold_disk_util | Threshold of disk utilization ( Default: 90, Unit: ) |
| watchdog.disk.threshold_disk_rkb | Threshold of disk read throughput ( Default: 98304, Unit: KB/sec ) |
| watchdog.disk.threshold_disk_wkb | Threshold of disk write throughput ( Default: 98304, Unit: KB/sec ) |
| watchdog.disk.target_devices | Target devices for checking disk utilization ( Default: [] ) |
| Watchdog / CLUSTER | |
| watchdog.cluster.is_enabled | Enables or disables the cluster-watchdog ( Default: false ) |
| watchdog.cluster.interval | Interval between executions of the watchdog processing ( Default: 10 ) |
| Watchdog / ERRORS | |
| watchdog.error.is_enabled | Enables or disables the error-watchdog ( Default: false ) |
| watchdog.error.interval | Interval between executions of the watchdog processing ( Default: 60 ) |
| watchdog.error.threshold_count | Total count of errors raised to a client ( Default: 100 ) |
| Data Compaction | |
| Data Compaction / Basic | |
| compaction.limit_num_of_compaction_procs | Limit on the number of processes that execute data-compaction in parallel ( Default: 4 ) |
| compaction.skip_prefetch_size | Prefetch size when skipping garbage ( Default: 512 ) |
| compaction.waiting_time_regular | Regular value of compaction-proc waiting time/batch-proc ( Default: 500, Unit: ) |
| compaction.waiting_time_max | Maximum value of compaction-proc waiting time/batch-proc ( Default: 3000, Unit: ) |
| compaction.batch_procs_regular | Total number of regular compaction batch processes ( Default: 1000 ) |
| compaction.batch_procs_max | Maximum number of compaction batch processes ( Default: 1500 ) |
| Data Compaction / Automated Data Compaction | |
| autonomic_op.compaction.is_enabled | Enables or disables the auto-compaction ( Default: false ) |
| autonomic_op.compaction.parallel_procs | Total number of parallel processes ( Default: 1 ) |
| autonomic_op.compaction.interval | Interval between auto-compactions ( Default: 3600, Unit: ) |
| autonomic_op.compaction.warn_active_size_ratio | Warning ratio of active size ( Default: 70, Unit: ) |
| autonomic_op.compaction.threshold_active_size_ratio | Threshold ratio of active size. LeoStorage starts data-compaction after reaching it ( Default: 60, Unit: ) |
| MQ | |
| mq.backend_db | The MQ storage is pluggable and depends on bitcask or leveldb. ( Default: leveldb ) |
| mq.num_of_mq_procs | Number of mq-server processes ( Default: 8 ) |
| mq.num_of_batch_process_max | Maximum number of batch processes of message ( Default: 3000 ) |
| mq.num_of_batch_process_regular | Regular number of batch processes of message ( Default: 1600 ) |
| mq.interval_between_batch_procs_max | Maximum value of interval between batch-procs ( Default: 3000, Unit: ) |
| mq.interval_between_batch_procs_regular | Regular value of interval between batch-procs ( Default: 500, Unit: ) |
| Backend DB / eleveldb | |
| backend_db.eleveldb.write_buf_size | Write Buffer Size. Larger values increase performance, especially during bulk loads. Up to two write buffers may be held in memory at the same time, so you may wish to adjust this parameter to control memory usage. Also, a larger write buffer will result in a longer recovery time the next time the database is opened. Because backend_db.eleveldb.write_buf_size * obj_containers.num_of_containers memory can be consumed in total, take both into account to meet your memory footprint requirements on LeoStorage. ( Default: 62914560 ) |
| backend_db.eleveldb.max_open_files | Max Open Files. Number of open files that can be used by the DB. You may need to increase this if your database has a large working set (budget one open file per 2MB of working set). ( Default: 1000 ) |
| backend_db.eleveldb.sst_block_size | The size of a data block is controlled by the SST block size. The size represents a threshold, not a fixed count. Whenever a newly created block reaches this uncompressed size, leveldb considers it full and writes the block with its metadata to disk. The number of keys contained in the block depends upon the size of the values and keys. ( Default: 4096 ) |
| Replication and Recovery object(s) | |
| replication.rack_awareness.rack_id | Rack-Id for the rack-awareness replica placement feature |
| replication.recovery.size_of_stacked_objs | Size of stacked objects. Objects are stacked to send as a bulked object to remote nodes. ( Default: 5242880, Unit: ) |
| replication.recovery.stacking_timeout | Stacking timeout. A bulked object is sent to a remote node after reaching the timeout. ( Default: 1, Unit: ) |
| Multi Data Center Replication / Basic | |
| mdc_replication.size_of_stacked_objs | Size of stacked objects. Objects are stacked to send as a bulked object to a remote cluster. ( Default: 33554432, Unit: ) |
| mdc_replication.stacking_timeout | Stacking timeout. A bulked object is sent to a remote cluster after reaching the timeout. ( Default: 30, Unit: ) |
| mdc_replication.req_timeout | Request timeout between clusters ( Default: 30000, Unit: ) |
| Log | |
| log.log_level | Log level: ( Default: 1 ) |
| log.is_enable_access_log | Enables or disables the access-log feature ( Default: false ) |
| log.access_log_level | Access log's level: ( Default: 0 ) |
| log.erlang | Destination of log file(s) of Erlang's log ( Default: ./log/erlang ) |
| log.app | Destination of log file(s) of LeoStorage ( Default: ./log/app ) |
| log.member_dir | Destination of log file(s) of members of storage-cluster ( Default: ./log/ring ) |
| log.ring_dir | Destination of log file(s) of RING ( Default: ./log/ring ) |
| log.is_enable_diagnosis_log | Enables or disables the data-diagnosis log(s) ( Default: true ) |
| Other Directories Settings | |
| queue_dir | Directory of queue for monitoring "RING" ( Default: ./work/queue ) |
| snmp_agent | Directory of SNMP agent configuration ( Default: ./snmp/snmpa_storage_0/LEO-STORAGE ) |

Erlang VM's Related Configurations

| Item | Description |
|---|---|
| nodename | The format of the node name is <NAME>@<IP-ADDRESS>, which must always be unique within a LeoFS system ( Default: [email protected] ) |
| distributed_cookie | Sets the magic cookie of the node to Cookie. See also: Distributed Erlang ( Default: 401321b4 ) |
| erlang.kernel_poll | Kernel poll reduces LeoFS' CPU usage when it has hundreds (or more) of network connections. ( Default: true ) |
| erlang.asyc_threads | The total number of Erlang async threads ( Default: 32 ) |
| erlang.max_ports | Sets the maximum number of ports. See also: Erlang erlang:open_port/2 ( Default: 64000 ) |
| erlang.crash_dump | The output destination of an Erlang crash dump ( Default: ./log/erl_crash.dump ) |
| erlang.max_ets_tables | The maximum number of Erlang ETS tables ( Default: 256000 ) |
| erlang.smp | -smp enable and -smp start the Erlang runtime system with SMP support enabled. ( Default: enable ) |
| erlang.schedulers.compaction_of_load | Enables or disables scheduler compaction of load. If it's enabled, the Erlang VM will attempt to fully load as many scheduler threads as possible. ( Default: true ) |
| erlang.schedulers.utilization_balancing | Enables or disables scheduler utilization balancing of load. By default scheduler utilization balancing is disabled and instead scheduler compaction of load is enabled, which strives for a load distribution that causes as many scheduler threads as possible to be fully loaded (that is, not run out of work). ( Default: false ) |
| erlang.distribution_buffer_size | Sender-side network distribution buffer size (unit: KB) ( Default: 32768 ) |
| erlang.fullsweep_after | Option fullsweep_after makes it possible to specify the maximum number of generational collections before forcing a fullsweep, even if there is room on the old heap. Setting the number to zero disables the general collection algorithm, that is, all live data is copied at every garbage collection. ( Default: 0 ) |
| erlang.secio | Enables or disables eager check I/O scheduling. The flag affects when schedulers check for I/O operations that can be executed, and when such I/O operations are executed. ( Default: true ) |
| process_limit | The maximum number of Erlang processes. Sets the maximum number of simultaneously existing processes for this system if a Number is passed as value. Valid range for Number is [1024-134217727] ( Default: 1048576 ) |

Notes and Tips of the Configuration

obj_containers.path, obj_containers.num_of_containers

You can configure multiple object-container directories by giving comma-separated values for obj_containers.path and obj_containers.num_of_containers.

    obj_containers.path = [/var/leofs/avs/1, /var/leofs/avs/2]
    obj_containers.num_of_containers = [32, 64]
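As a rough aid for sizing, the following Python sketch estimates the write-buffer memory implied by the product backend_db.eleveldb.write_buf_size * obj_containers.num_of_containers described in the configuration table. It assumes that the container counts of all directories are summed, which this page does not state explicitly; the helper names `parse_list` and `estimate_write_buffer_bytes` are hypothetical and are not part of LeoFS.

```python
# Default of backend_db.eleveldb.write_buf_size from the table, in bytes.
WRITE_BUF_SIZE = 62914560


def parse_list(value: str) -> list:
    """Split a '[a, b, c]' style configuration value into its items."""
    inner = value.strip().strip("[]")
    return [item.strip() for item in inner.split(",") if item.strip()]


def estimate_write_buffer_bytes(paths: str, containers: str,
                                write_buf_size: int = WRITE_BUF_SIZE) -> int:
    """Estimate write_buf_size * (total number of containers).

    Assumption: the per-directory container counts add up across directories.
    """
    path_list = parse_list(paths)
    counts = [int(n) for n in parse_list(containers)]
    if len(path_list) != len(counts):
        raise ValueError("each path needs exactly one container count")
    return write_buf_size * sum(counts)


total = estimate_write_buffer_bytes(
    "[/var/leofs/avs/1, /var/leofs/avs/2]", "[32, 64]"
)
print(total // (1024 * 1024), "MiB")  # prints "5760 MiB"
```

With the defaults (one directory, 8 containers) the same estimate comes to 480 MiB, so raising the container count without lowering the write buffer size can noticeably increase the memory footprint.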

object_storage.is_strict_check

Unless object_storage.is_strict_check is set to true, there is a small possibility that your data could be corrupted without any warning when a bug in unexpected or unknown software breaks the AVS files. This holds even if the LeoFS system runs on a filesystem such as ZFS [1], which protects both the metadata and the data blocks with checksums.
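The idea behind a strict check can be illustrated with a minimal Python sketch: recompute a digest of the object body and compare it with the checksum kept in the metadata. This is only an illustration of the concept; the use of MD5, the hex encoding, and the function name `is_consistent` are assumptions made for the example and do not describe LeoStorage's actual implementation.

```python
import hashlib


def is_consistent(object_body: bytes, metadata_checksum: str) -> bool:
    """Return True when the body's digest matches the checksum in the metadata.

    Assumption: the checksum is an MD5 hex digest (illustrative only).
    """
    return hashlib.md5(object_body).hexdigest() == metadata_checksum


body = b"example object body"
stored_checksum = hashlib.md5(body).hexdigest()  # what the metadata would hold

# A single flipped byte in the stored body is detected by the comparison.
corrupted = b"example object bodY"
print(is_consistent(body, stored_checksum))
print(is_consistent(corrupted, stored_checksum))
```

A check of this kind costs an extra digest computation per read, which is the usual trade-off for catching silent corruption above the filesystem layer.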

Configuration which can affect Load and CPU usage

mq.num_of_mq_procs affects not only the performance and load during recover/rebalance operations, but also the load while at least one node in the cluster is suspended or down. Setting mq.num_of_mq_procs to an appropriate value based on the expected traffic and the hardware specs is therefore important. This section gives a brief overview of mq.num_of_mq_procs and how to choose a value that fits your requirements.

- How the mq.num_of_mq_procs setting affects the system operations
    - High
        - Fast recover/rebalance time
        - High CPU/load on storage during recover/rebalance, and also while suspended/stopped nodes exist in the cluster
    - Low
        - Slow recover/rebalance time
        - Low CPU/load on storage during recover/rebalance, and also while suspended/stopped nodes exist in the cluster
- Recommended settings
    - If you have enough CPU resources on the storage nodes, set it to a higher value as long as it doesn't affect the operations coming from LeoGateway
    - If you don't, set it to a somewhat lower value unless the recovery takes too much time

For more details, please see Issue #987 [2].

Configuration related to MQ

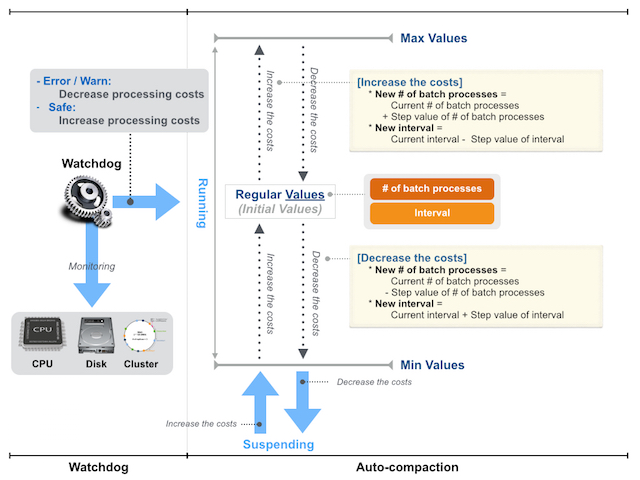

LeoStorage's MQ mechanism depends on the watchdog mechanism to reduce the cost of message consumption. The MQ dynamically updates the number of batch processes and the interval of message consumption.

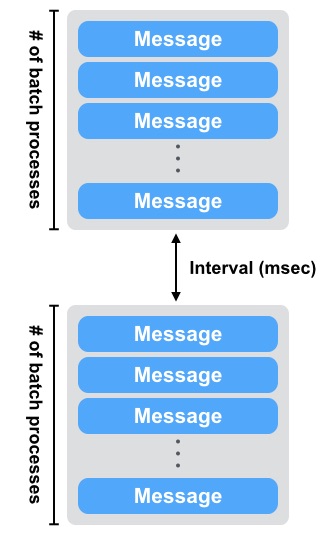

Figure: Number-of-batch-processes and interval

As shown in Figure: Relationship of Watchdog and MQ, the watchdog automatically adjusts the number of batch processes between mq.num_of_batch_process_min and mq.num_of_batch_process_max, increasing or decreasing it by mq.num_of_batch_process_step.

The interval, on the other hand, is adjusted between mq.interval_between_batch_procs_min and mq.interval_between_batch_procs_max, increasing or decreasing by mq.interval_between_batch_procs_step.

When each value reaches its minimum, the MQ changes its status to suspending. Once the node's processing load becomes low, the MQ changes its status back to running.
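The stepwise adjustment can be pictured with a small Python simulation. It is a sketch under stated assumptions, not LeoStorage's implementation: the class names `Knob` and `MqThrottle` are hypothetical, the min and step values are made-up placeholders (only the regular and max values come from the table on this page), overload is assumed to lower the batch count and lengthen the interval, and suspension is modeled on the batch count alone.

```python
from dataclasses import dataclass


@dataclass
class Knob:
    """A value moved in fixed steps inside [minimum, maximum]."""
    value: int
    minimum: int
    maximum: int
    step: int

    def lower(self) -> None:
        self.value = max(self.minimum, self.value - self.step)

    def raise_(self) -> None:
        self.value = min(self.maximum, self.value + self.step)


@dataclass
class MqThrottle:
    batch_procs: Knob
    interval: Knob
    status: str = "running"

    def on_watchdog(self, overloaded: bool) -> None:
        """Apply one watchdog signal (assumed behaviour, see lead-in)."""
        if overloaded:
            self.batch_procs.lower()
            self.interval.raise_()
            if self.batch_procs.value == self.batch_procs.minimum:
                self.status = "suspending"
        else:
            self.batch_procs.raise_()
            self.interval.lower()
            self.status = "running"


# Regular/max values follow the table; minimum and step are placeholders.
mq = MqThrottle(
    batch_procs=Knob(value=1600, minimum=100, maximum=3000, step=500),
    interval=Knob(value=500, minimum=10, maximum=3000, step=500),
)
for _ in range(3):
    mq.on_watchdog(overloaded=True)
print(mq.status, mq.batch_procs.value, mq.interval.value)

mq.on_watchdog(overloaded=False)
print(mq.status, mq.batch_procs.value, mq.interval.value)
```

In this model three consecutive overload signals bring the batch count down to its minimum and suspend consumption, and the first calm signal resumes it one step above the minimum.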

Configuration related to the auto-compaction

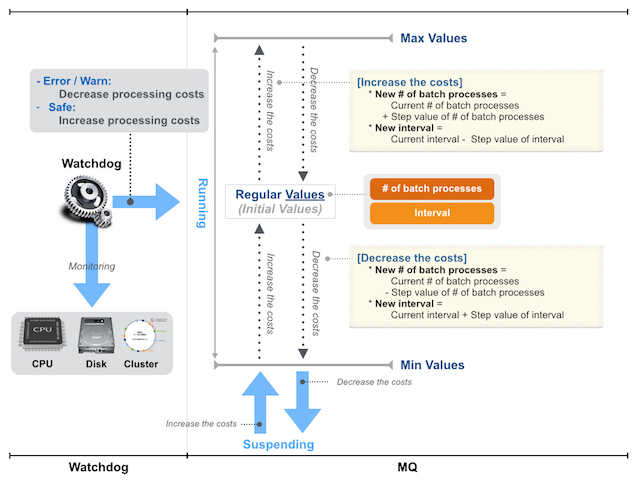

LeoStorage's auto-compaction mechanism also depends on the watchdog mechanism to reduce processing costs. The auto-compaction dynamically updates the number of batch processes and the interval used when seeking objects. The basic design of its relationship with the watchdog is similar to that of the MQ.

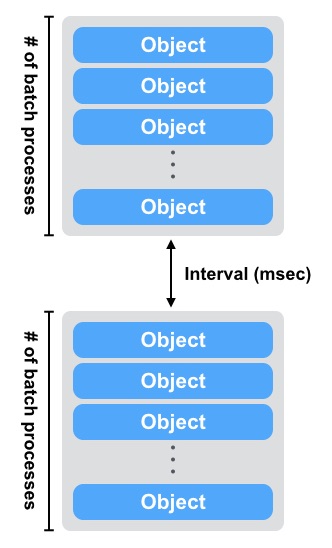

Figure: Number-of-batch-processes and interval

As shown in Figure: Relationship of the watchdog and the auto-compaction, the watchdog automatically adjusts the number of batch processes between compaction.batch_procs_min and compaction.batch_procs_max, increasing or decreasing it by compaction.batch_procs_step.

The interval, on the other hand, is adjusted between compaction.waiting_time_min and compaction.waiting_time_max, increasing or decreasing by compaction.waiting_time_step.

When each value reaches its minimum, the auto-compaction changes its status to suspending. Once the node's processing load becomes low, the auto-compaction changes its status back to running.

Figure: Relationship of the watchdog and the auto-compaction
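The two auto-compaction ratios from the configuration table, autonomic_op.compaction.warn_active_size_ratio (default 70) and autonomic_op.compaction.threshold_active_size_ratio (default 60), can be read as a simple decision rule. The Python sketch below is an interpretation rather than LeoStorage's code: it assumes the active size ratio is the share of live data in a container, expressed as a percentage, and that falling to or below a ratio triggers the corresponding action. The function name `compaction_action` is hypothetical.

```python
def compaction_action(active_bytes: int, total_bytes: int,
                      warn_ratio: int = 70, threshold_ratio: int = 60) -> str:
    """Classify a container by its active size ratio (assumed: active/total * 100)."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    ratio = active_bytes * 100 / total_bytes
    if ratio <= threshold_ratio:
        return "compact"  # threshold reached: start data-compaction
    if ratio <= warn_ratio:
        return "warn"     # warning level reached, no compaction yet
    return "ok"


for active in (90, 65, 60):
    print(active, compaction_action(active, 100))
```

Under these assumptions a container with 90% active data needs no action, 65% produces a warning, and 60% starts compaction.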